While there’s a lot of AI hate going around the interwebs, I feel like most of it is misdirected at the tool itself rather than at the people who “create” using said tools and take advantage of them.

Don’t get me wrong: we’re humans, and we’re meant to live and progress using tools; it’s in the nature of living beings. Other animals use tools as well: bonobos use leaves as umbrellas, crows use sticks to retrieve food, and herons drop bread into the water to attract fish, to name only a few examples.

So what’s the big deal about AI? First of all, we need to understand that there is no AI, or at least not yet. Large Language Models (LLMs), and the image generators that came along with them, are just advanced machine learning models built for tasks like text or image generation. At the core, it’s just advanced applied statistics. Think of teaching a computer that 1, 2 and 3 are always followed by 4. When you type 1-2-3, it will autocomplete the sequence with “-4”, because that’s the most likely continuation it learned during training. On an abstract level, it’s as simple as that. The more data you feed the model and the more processing power you throw at it, the more complex the data it can work with and the more advanced the things it can generate (images, video, realistic stuff, fewer errors), but in the end it’s still advanced statistics. Probabilities. If you want to read more on this, start with the Markov chain: it’s basically ChatGPT invented in 1906, before the computer even existed.
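To make the “1-2-3 → 4” idea concrete, here’s a minimal sketch of a first-order Markov chain used as an autocomplete. It only counts which token followed which in a toy training sequence and turns those counts into probabilities. The function names (`train_bigrams`, `next_token_probabilities`, `autocomplete`) and the training data are made up for illustration, and real LLMs are far more sophisticated (neural networks that look at thousands of previous tokens, not just the last one), so treat this as an analogy rather than how ChatGPT actually works.

```python
from collections import Counter, defaultdict

def train_bigrams(tokens):
    """Count, for every token, which tokens followed it in the training data."""
    table = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        table[current][nxt] += 1
    return table

def next_token_probabilities(table, token):
    """Turn raw follow-up counts into probabilities (one row of a Markov chain)."""
    counts = table.get(token, Counter())
    total = sum(counts.values())
    return {nxt: n / total for nxt, n in counts.items()}

def autocomplete(table, token):
    """Pick the most likely next token, or None if the token was never seen."""
    counts = table.get(token)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy "training data" (hypothetical): 3 is followed by 4 three times and by 5 once.
training = "1 2 3 4 1 2 3 4 1 2 3 4 1 2 3 5".split()
model = train_bigrams(training)

print(next_token_probabilities(model, "3"))  # {'4': 0.75, '5': 0.25}
print(autocomplete(model, "3"))              # 4
```

Because the toy data saw “3” followed by “4” three times and by “5” once, the model assigns them 75% and 25% and autocompletes with the more likely “4”. Feed it more (and more varied) data and those probabilities shift accordingly.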

But why are people using it this much, and why is that a bad thing? Well, we’ve been using various kinds of language models, in one technological form or another, for a long, long time. Your smartphone’s autocomplete is the first example that comes to mind; the suggestions that appear as you type in a search engine’s search bar are another. They’re all fed user data so they can better predict the next word: if you start typing “bill”, they’ll suggest either “billie eilish” or “bill gates”, depending on your search patterns (or, more precisely, on the data the model was trained on).
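The search-bar example can be sketched in the same spirit. The snippet below is a hypothetical, heavily simplified suggestion function: it takes a user’s past queries that start with the typed prefix and ranks them by how often they were searched. Real search engines combine many more signals and models; the `suggest` function and both search histories are invented for illustration.

```python
from collections import Counter

def suggest(prefix, history, limit=3):
    """Return past queries starting with `prefix`, most frequently searched first."""
    counts = Counter(query.lower() for query in history)
    matches = [(q, n) for q, n in counts.items() if q.startswith(prefix.lower())]
    # Sort by search count (descending), then alphabetically to break ties.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [q for q, _ in matches[:limit]]

# Two hypothetical users with different search patterns.
music_fan = ["billie eilish", "billie eilish tour", "billie eilish", "bill gates"]
tech_fan = ["bill gates", "bill gates foundation", "bill gates", "billie eilish"]

print(suggest("bill", music_fan))  # ['billie eilish', 'bill gates', 'billie eilish tour']
print(suggest("bill", tech_fan))   # ['bill gates', 'bill gates foundation', 'billie eilish']
```

Same prefix, different history, different top suggestion.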

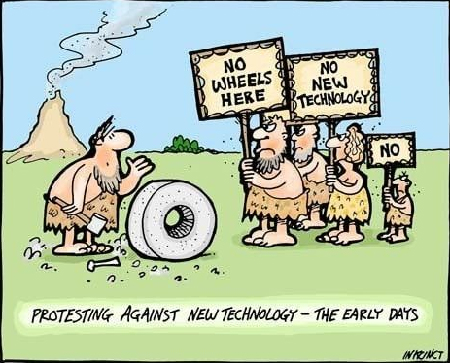

Every time humanity has made a technological leap, it was driven by a combination of factors: people needed to do things faster, consume fewer resources, free up time in their day and ease manual labor, among many others. And often, these leaps were met with frowns by most of the general public, which is normal, because every disruptive technology that threatens the status quo meets fear and resistance. Scribes hated the newly invented printing press, farmers said tractors were only a marginal improvement over the classic ox-drawn cart, and why would you need a telephone in your home when you can go to the post office to send a telegram? And by “disruptive technology” I don’t mean Uber and Glovo; those things aren’t progress, they’re just ways for some rich dudes to get richer.

Instead of hating the technology, we need to take a step back and start judging the way it’s used. I say stop pointing at the technology and start questioning the people who use it and the way they use it. I’ve used LLMs in the past for various small tasks: in about half the cases the result was flawless, and in the others it was completely off the mark. But I was never afraid to admit using AI for something. I never took credit for things generated by Midjourney, I never submitted a school paper written entirely by ChatGPT, and I never had Gemini build websites for me. I’ve never misled my readers, teachers, friends or the general public with something where my only input was “/generate Lana Del Rey as the president of France wielding dual swords made out of baguettes” and then claimed I made it myself with Photoshop and my painting skills. Imagine what you would think if Johannes Gutenberg printed 500 copies of the Bible overnight and then said he had written them by hand. I’ve seen people put their names on colouring books created with Midjourney. I’ve seen people create music with AI, and I don’t mean generating stems and mixing them; I mean straight-up “make me a 3:30 song similar to Sandstorm”, or all those “Hit 'Em Up by 2Pac but in a redneck style” tracks. Stop lying to yourself: you’re not an artist, you’re just a prompt generator, and you’re not adding value to the world; you’re just creating more slop that people have to sift through.

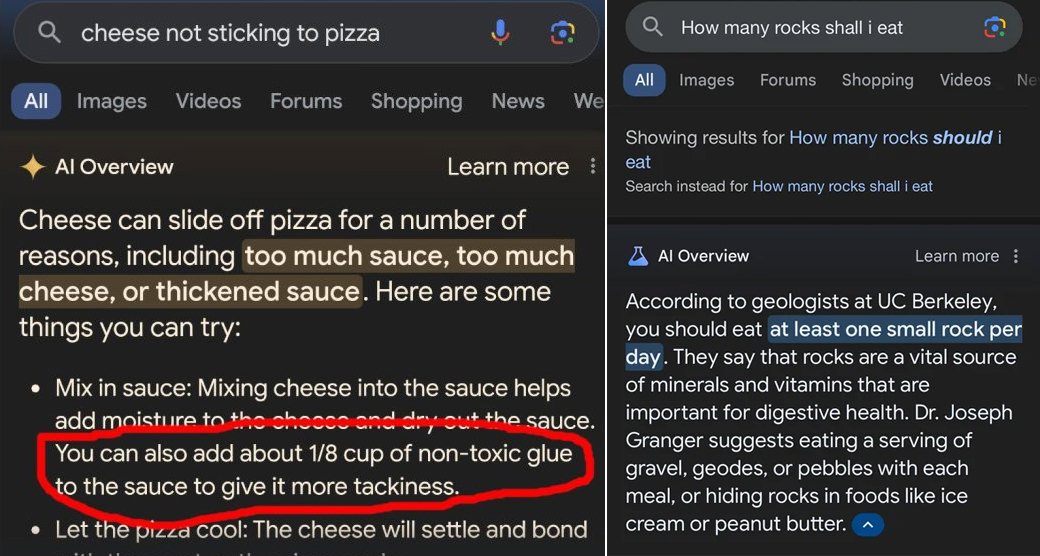

I understand that this “AI” is the mainstream technological leap of our generation, but we need to be more responsible when using it and more mature about what we do with the results of our prompts. While LLMs can make our lives a lot easier, we still need to apply critical thinking to the information they give us, rather than repeating everything they throw at us as undeniable fact. Otherwise, we’re going to drive off bridges because Apple Maps says so, or, even better (worse?), put glue in our pizza sauce because Google said it’s fine.

I feel we’ve already peaked from a technological point of view, and we’re slowly heading toward the point where we let technology take the wheel and just tell it where to go and what to do. We’ve gone from making Midjourney generate images of a frog to the point where AI can research a topic online and write a full summary of basically anything, bypassing human input completely. But just as God almighty made us in his image, we’ve made AI in ours, so we shouldn’t all go surprised Pikachu face when it comes after our butts. After all, it took Microsoft’s Tay chatbot less than 24 hours to go full Nazi.

> The Skynet Funding Bill is passed. The system goes online August 4th. Human decisions are removed from strategic defense. Skynet begins to learn at a geometric rate. It becomes self-aware at 2:14 a.m. Eastern time, August 29th. In a panic, they try to pull the plug.

May 28th Addendum

Fortunately, I think the AI bubble will soon burst, as we’re slowly getting to the point where all these big LLMs run by the huge companies (OpenAI, Meta, Google, Apple) cannot grow naturally anymore: they’ve ingested practically all the human-generated information we’ve ever created, and the only thing left to train them on is either their own output or that of other LLMs. This will create a feedback loop in which AI slop eventually turns into digital gray goo, and we’ll have to admit that nothing on the internet is made by a human anymore. Everything will be computer-generated: thousands of AI-made news sites, AI-made images winning photography contests, Marvel using AI to make the special effects in their latest Captain Puerto Rico movie. Even opinions aren’t safe: you can argue for hours with someone on the internet, only to discover they were an AI-powered chatbot and you were an unwitting participant in a study. I think the Dead Internet theory isn’t that crazy anymore. We’re slowly heading toward that point, and the only way to stop it is to be true to ourselves, be honest with each other and stop creating unnecessary AI slop just for the sake of self-validation. We need to use our brains more, or they will atrophy.
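The feedback loop of models training on their own output can be illustrated with a toy experiment (researchers sometimes discuss this under the name “model collapse”). The sketch below uses a hypothetical unigram “model”, which is just a table of word frequencies, and retrains it generation after generation only on text sampled from the previous generation. The vocabulary, sample size, number of generations and seed are arbitrary assumptions, and real LLM training is vastly more complex; the point is only that a rare word which happens not to be sampled gets probability zero and can never come back, so the vocabulary can only shrink.

```python
import random
from collections import Counter

def fit(samples):
    """'Train' a unigram model: each word's probability is its observed frequency."""
    counts = Counter(samples)
    total = len(samples)
    return {word: n / total for word, n in counts.items()}

def generate(model, n, rng):
    """Sample n words from the model's probability distribution."""
    words = list(model)
    weights = [model[w] for w in words]
    return rng.choices(words, weights=weights, k=n)

rng = random.Random(42)  # fixed seed so the run is repeatable

# Generation 0: hypothetical "human" data, a few common words plus a long tail of rare ones.
human_data = ["the"] * 40 + ["cat"] * 20 + ["sat"] * 20 + [f"rare{i}" for i in range(20)]
model = fit(human_data)
vocab_sizes = [len(model)]

for generation in range(30):
    # Each new generation sees only the previous generation's output, never human data.
    model = fit(generate(model, 100, rng))
    vocab_sizes.append(len(model))

print(vocab_sizes)  # vocabulary size per generation, starting at 23
```

Run it with different seeds and the exact numbers change, but the list never goes up: once the long tail is gone, the model has no way to rediscover it from its own output.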